Qwen3: Think Deeper, Act Faster

Qwen3 introduces hybrid thinking modes with strong reasoning capabilities, supports 119 languages and dialects, and includes Mixture-of-Experts (MoE) models for efficient inference.

🚀 Advanced hybrid reasoning and 128K context window

What is Qwen3

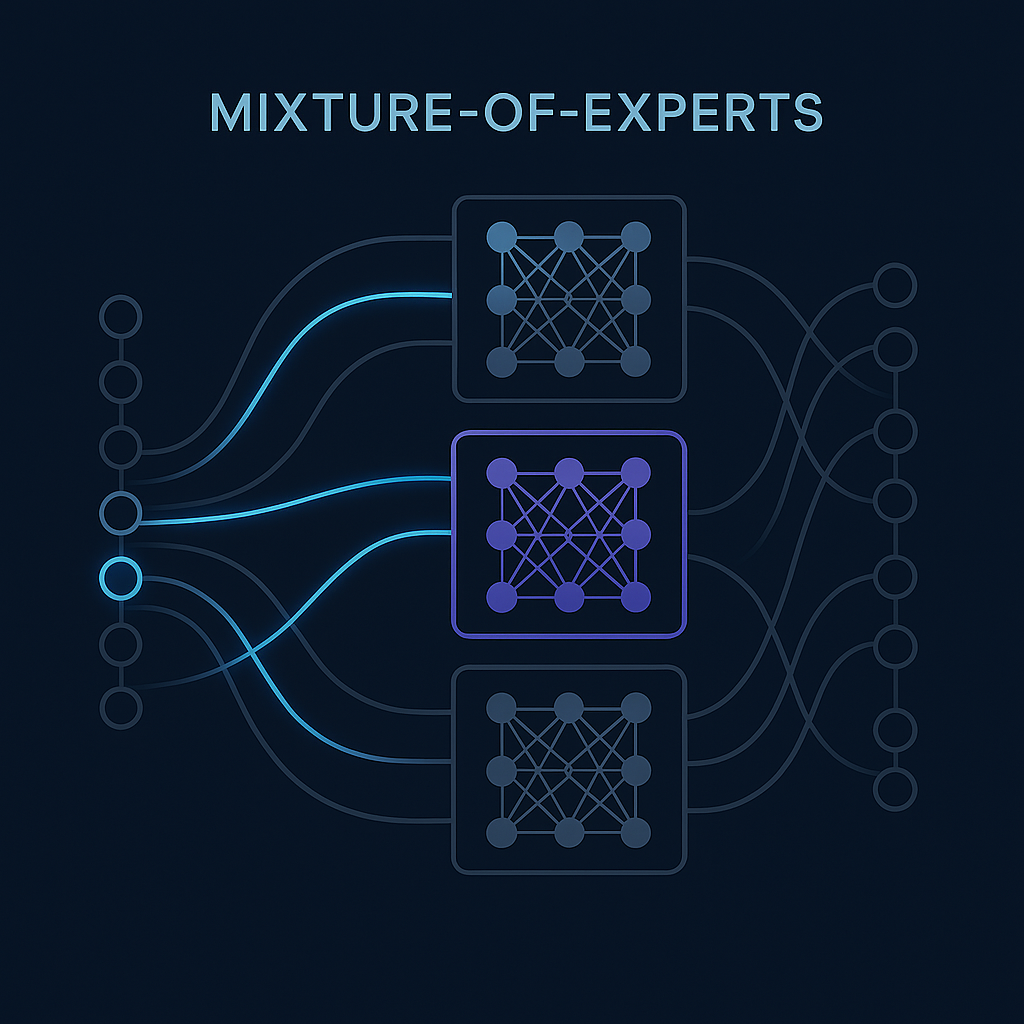

Qwen3 is our latest family of large language models, comprising both dense and Mixture-of-Experts (MoE) models that combine advanced reasoning with efficient processing. Trained on 36 trillion tokens, Qwen3 delivers strong performance across coding, mathematics, reasoning, and multilingual tasks.

- Hybrid Thinking Modes: Switch between in-depth reasoning for complex problems and quick responses for simpler tasks, with a configurable thinking budget.

- Mixture-of-Experts: A router activates only a small subset of experts for each token, so the MoE models use far less compute per token than their total parameter count suggests (Qwen3-235B-A22B activates about 22B of its 235B parameters).

- Extensive Multilingual Support: Strong capabilities across 119 languages and dialects, from Western European to South Asian languages.
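The routing idea behind the Mixture-of-Experts bullet can be sketched in a few lines of plain Python. This is a generic top-k gating illustration under simplifying assumptions (a linear router, a softmax over only the selected experts' scores, and toy experts written as plain functions); it is not Qwen3's actual implementation, and moe_forward and softmax are hypothetical helpers introduced here.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    router_weights: List[Vector],  # one router row per expert
    experts: List[Callable[[Vector], Vector]],
    top_k: int,
) -> Vector:
    if not 1 <= top_k <= len(experts):
        raise ValueError("top_k must be between 1 and the number of experts")
    # Router score for each expert: dot product of the token with that expert's row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the top-k experts; the others are never evaluated for this token.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])
    # The output is the gate-weighted sum of the selected experts' outputs.
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out


if __name__ == "__main__":
    # Four toy experts that scale the input by 1, 2, 3, and 4.
    toy_experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
    # With top_k=2, only the two highest-scoring experts (scales 4 and 3) run.
    print(moe_forward([1.0, 0.0], router, toy_experts, top_k=2))
```

Only the selected experts execute, which is why an MoE model's per-token compute tracks its activated parameters rather than its total parameter count.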

Why Choose Qwen3

Experience the future of AI with Qwen3's cutting-edge capabilities, designed to handle complex tasks while maintaining exceptional efficiency.

How to Use Qwen3 Online

Experience Qwen3 instantly through our online platform:

Key Features of Qwen3

Discover the powerful capabilities that make Qwen3 stand out in the world of large language models.

Advanced Pre-training

Trained on 36 trillion tokens covering 119 languages and dialects, with expanded knowledge from web and PDF-like documents.

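Four-Stage Post-Training

A four-stage post-training pipeline (long chain-of-thought cold start, reasoning-based reinforcement learning, thinking mode fusion, and general reinforcement learning) produces models that support both step-by-step reasoning and rapid responses.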

Robust Model Family

Eight open-weight models ranging from 0.6B to 235B parameters: six dense models (0.6B, 1.7B, 4B, 8B, 14B, and 32B) and two MoE models (Qwen3-30B-A3B and Qwen3-235B-A22B) that reduce both training and inference costs.
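The model names encode both sizes: in a name such as Qwen3-235B-A22B, the first number is the total parameter count and the "A" suffix gives the parameters activated per token. The parser below is a small hypothetical helper (parse_model_name and active_fraction are not part of any Qwen library) that assumes bare names like those above, without an organization prefix such as "Qwen/".

```python
import re
from typing import NamedTuple, Optional


class ModelSize(NamedTuple):
    total_b: float             # total parameters, in billions
    active_b: Optional[float]  # parameters activated per token; None for dense models


# Matches names like "Qwen3-8B" (dense) and "Qwen3-30B-A3B" (MoE).
_NAME_RE = re.compile(r"Qwen3-(\d+(?:\.\d+)?)B(?:-A(\d+(?:\.\d+)?)B)?")


def parse_model_name(name: str) -> ModelSize:
    match = _NAME_RE.fullmatch(name)
    if match is None:
        raise ValueError(f"unrecognized Qwen3 model name: {name!r}")
    total = float(match.group(1))
    active = float(match.group(2)) if match.group(2) is not None else None
    return ModelSize(total, active)


def active_fraction(size: ModelSize) -> float:
    # Dense models use every parameter for every token.
    return 1.0 if size.active_b is None else size.active_b / size.total_b
```

For Qwen3-235B-A22B this gives roughly 9% of parameters active per token, versus 100% for any of the dense models.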

Extended Context Length

Context lengths of up to 128K tokens (32K for the 0.6B, 1.7B, and 4B models) for processing and analyzing long documents.

Benchmark Excellence

Strong results on benchmarks such as Arena-Hard, LiveBench, LiveCodeBench, GPQA-Diamond, and MMLU-Pro.

AI-Ready Deployment

Supports deployment with serving frameworks such as SGLang and vLLM, which expose OpenAI-compatible API endpoints.

Qwen3 Performance

Delivering state-of-the-art results across key benchmarks.

- Language Support: 119 languages

- Training Data: 36T tokens

- Context Length: 128K tokens

What Users Say About Qwen3

Hear from developers and researchers who have integrated Qwen3 into their projects and workflows.

David Chen

AI Researcher

Qwen3's hybrid thinking modes have revolutionized our research workflow. The ability to configure thinking budgets gives us unprecedented control over the balance between performance and efficiency.

Rachel Kim

Software Developer

The MoE architecture in Qwen3 delivers exceptional performance at a fraction of the computational cost. We've been able to deploy sophisticated AI capabilities that were previously out of reach for our organization.

Michael Johnson

NLP Engineer

Qwen3's support for 119 languages has been a game-changer for our multilingual applications. The model handles complex translation tasks and cross-lingual understanding with remarkable accuracy.

Sofia Garcia

Research Scientist

The four-stage training process produces models that excel at both reasoning and quick responses. We can dynamically switch between thinking modes depending on the complexity of the task.

James Wilson

ML Engineer

Qwen3's infrastructure is rock-solid. We deployed our application using vLLM and created an OpenAI-compatible endpoint in just hours. Best investment for our AI research team.

Anna Zhang

AI Application Developer

The improvement in reasoning capabilities is incredible. Qwen3 has solved complex math problems that other models simply couldn't handle, and it does so with clear step-by-step thinking.

Frequently Asked Questions About Qwen3

Find answers to common questions about using and deploying Qwen3 models.

What makes Qwen3 different from other large language models?

Qwen3 introduces hybrid thinking modes, allowing a single model to switch between deep reasoning and quick responses. Its MoE models activate only a fraction of their parameters for each token, delivering strong performance with lower computational requirements. The models also support 119 languages and dialects and context lengths of up to 128K tokens.

How can I control the thinking modes in Qwen3?

You can control Qwen3's thinking modes with the 'enable_thinking' parameter of the chat template (for example, in tokenizer.apply_chat_template with Hugging Face Transformers). It defaults to True, which enables in-depth reasoning; setting it to False produces quicker responses without a reasoning step. When thinking is enabled, you can also add '/think' or '/no_think' to user or system messages to switch modes turn by turn in multi-turn conversations.
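A minimal sketch of the soft switch and of separating the reasoning from the final answer, using only the Python standard library. It assumes, as the Qwen3 model card describes, that thinking-mode output wraps its reasoning in <think>...</think> and that this block may be empty after '/no_think'; with_soft_switch and split_thinking are hypothetical helpers introduced here, not part of any Qwen package.

```python
import re
from typing import Dict, List, Tuple

# Matches the reasoning block that Qwen3 emits in thinking mode.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_soft_switch(messages: List[Dict[str, str]], think: bool) -> List[Dict[str, str]]:
    """Return a copy of messages with '/think' or '/no_think' appended to the last user turn."""
    tag = "/think" if think else "/no_think"
    out = [dict(m) for m in messages]  # copy so the caller's history is untouched
    for m in reversed(out):
        if m["role"] == "user":
            m["content"] = f"{m['content']} {tag}"
            break
    return out


def split_thinking(text: str) -> Tuple[str, str]:
    """Split a completion into (reasoning, answer); reasoning is '' when absent or empty."""
    match = THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer
```

Keeping only the answer half when appending an assistant turn to the history is in line with the Qwen3 model card's recommendation that multi-turn history contain final outputs rather than thinking content.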

What types of tasks can I build with Qwen3?

Qwen3 supports a wide range of AI applications, from content generation to complex reasoning tasks. The models excel at coding, mathematics, logical reasoning, and multilingual translation, making them suitable for chatbots, research assistants, creative writing tools, and more.

What deployment options are available for Qwen3?

Qwen3 models can be deployed using frameworks like SGLang and vLLM to create OpenAI-compatible API endpoints. For local usage, you can use tools like Ollama, LMStudio, MLX, llama.cpp, or KTransformers. All models are available for download from Hugging Face, ModelScope, and Kaggle under the Apache 2.0 license.
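As a sketch of calling such an endpoint with only the Python standard library: it assumes a vLLM server at http://localhost:8000/v1 (vLLM's default port; SGLang defaults to 30000) serving the example model Qwen/Qwen3-8B, and it passes enable_thinking through the chat_template_kwargs request field, which vLLM and SGLang forward to the chat template. Check your server version's documentation before relying on that field; build_chat_request and send_chat_request are hypothetical helpers.

```python
import json
import urllib.request

# Assumptions: a local OpenAI-compatible server and an example model ID.
BASE_URL = "http://localhost:8000/v1"
MODEL = "Qwen/Qwen3-8B"


def build_chat_request(prompt: str, enable_thinking: bool = True, max_tokens: int = 1024) -> dict:
    """Build an OpenAI-style chat completion payload for a single user prompt."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        # Server-specific extension: forwarded to the model's chat template.
        "chat_template_kwargs": {"enable_thinking": enable_thinking},
    }


def send_chat_request(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload to /chat/completions and return the first reply's text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, send_chat_request(build_chat_request("Summarize MoE in one sentence.", enable_thinking=False)) returns the reply text. Building the payload separately from sending it keeps the request shape easy to inspect and test without a live server.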

What hardware is needed to run Qwen3 models?

Hardware requirements depend on the model size. MoE models like Qwen3-235B-A22B still need enough GPU memory to hold all 235B parameters, but because only about 22B are activated per token, they need less compute per token than dense models of comparable quality. Smaller models like Qwen3-0.6B and Qwen3-1.7B can run on consumer hardware with modest GPU memory.
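A back-of-the-envelope estimate makes the memory-versus-compute distinction concrete. The sketch below assumes weights dominate memory (it ignores the KV cache, which grows with context length, as well as activations and framework overhead) and uses the common rule of thumb of about 2 FLOPs per activated parameter per token for a forward pass. The function names are hypothetical, and real requirements will be higher.

```python
# Approximate storage per parameter at common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(total_params_b: float, precision: str = "bf16") -> float:
    """GB (1e9 bytes) needed just to hold the weights; every expert must be resident."""
    return total_params_b * BYTES_PER_PARAM[precision]


def forward_flops_per_token(active_params_b: float) -> float:
    """Rule-of-thumb forward-pass cost: about 2 FLOPs per activated parameter."""
    return 2.0 * active_params_b * 1e9


if __name__ == "__main__":
    # (name, total params in billions, activated params in billions)
    models = [("Qwen3-235B-A22B", 235, 22), ("Qwen3-32B", 32, 32), ("Qwen3-1.7B", 1.7, 1.7)]
    for name, total, active in models:
        print(
            f"{name}: ~{weight_memory_gb(total):.1f} GB of BF16 weights, "
            f"~{forward_flops_per_token(active) / 1e9:.1f} GFLOPs per token"
        )
```

By this estimate, Qwen3-235B-A22B needs roughly 470 GB for BF16 weights alone, far beyond a single consumer GPU, while its per-token compute (about 44 GFLOPs) is closer to that of a 22B dense model than a 235B one.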

What is the license for Qwen3 models?

All Qwen3 models are available under the Apache 2.0 license, which allows for both commercial and non-commercial use, modification, and distribution, providing flexibility for researchers and businesses.

Ready to Experience the Power of Qwen3?

Start building with our state-of-the-art large language models today.